Opinion Detection by Transfer Learning

11-742 Information Retrieval Lab

Instructor: Professor Yiming Yang

Grace Hui Yang (huiyang@cs.cmu.edu)

Abstract

Opinion detection is the main task of TREC 2006 Blog track, which identifies opinions from text documents in the TREC blog corpus. Given that it is the first year of the task,training data provided. Using knowledge about how people give opinions on other domains,opinion detection in blog domain. This work describes how to apply transfer learning in opinion detection. A Bayesian logistic regression framework is used and knowledge from training data in other domains is captured by a non-diagonal prior covariance matrix. The experimental results show that the approach is effective and achieve an improvement of 32% from baseline.

Timeline

| Task | Done By | Status | Codes/Documents |

| Project Proposal and Work Plan | Feb 10, 2007 | Complete | this webpage |

| Logistic Regression Toolkit with Multivariant Gaussian Prior | Feb 16, 2007 | Complete | matlab codes |

| Construct Word Feature Matrix by Wordnet Synset and Word Polarity | Feb 23, 2007 | Complete | perl codes and wordnet synset pairs |

| Optimize Covariance Matrix by Semi-definite Programming | Mar 9, 2007 | Complete | matlab codes |

| Logistic Regression Toolkit with Informative Prior | Mar 16, 2007 | Complete | matlab codes |

| Experiments with TREC 2006 Blog data | Mar 23, 2007 | Complete | See related sections in report |

| Report | April 12, 2007 | Complete | pdf download |

| Presentation | April 17, 2007 | Complete | ppt download |

Introduction

Opinion detection is an emerging topic that attracts more and more research interests from researchers in data mining and natural language processing. Given a document, opinion detection task identifies and extracts the opinionated expressions for a certain topic. Some opinions expressed in a general way as in "I really like this work", hence words with sentiment polarity are playing an important role to recognizing the presence of an opinion. On the other hand, there are many opinions have its own way to express, for example, "Watching the film is like reading a times portrait of grief that keeps shifting focus to the journalist who wrote it". Given the great variety and complexity of human language, opinion detection is a challenging job.

In year 2006, Text REtrieval Conference (TREC) started a new track to study research topics in the blog domain, and opinion detection in blogs is the main task. Since it is the first year and blog data is pretty new in the research community, there is a lack of training data. Given the lack of training data from blog corpus, simple supervised learning is not possible. How to transfer knowledge about opinions from other domains, which have labelled training data, is another challenge.

This project gives a try to use techniques in transfer learning to incorporate common features for opinion detection across different domains to solve the problem of no training data. Bayesian Logistic Regression is the main framework used. The common knowledge is formed into a non-diagonal covariance matrix for the prior of regression coefficients. The learned prior from movie and product reviews is used to estimate whether a sentence is an opinion or not in the blog domain. Moreover, different from classic text classification task, opinion detection has its own effective features in the classification process. This paper also describes "Target-Opinion" word pairs and word synonyms and their effects on opinion detection.

Literature Review

Opinion Detection

Researchers in Natural Language Processing (NLP) community are the

pioneers for the opinion detection task. Turney

Turney (2002) groups online words whose point mutual

information is close to two words - "excellent" and "poor", and

then use them to detect opinions and sentiment polarity. Riloff

and Wiebe (Riloff & Wiebe 2003) use a high-precision classifier

to get high quality opinion and non-opinion sentences, and then

extract surface text patterns from those sentences to find more

opinions and non-opinions and repeat this process to bootstrap.

Pang et al. (Pang et al. 2002) treated opinion and sentiment

detection and as a text classification problem and use classical

classification methods, like Naive Bayes, Maximum Entropy, Support

Vector Machines, with word unigram to predict them. Pang and Lee

We participated in TREC-2006 Blog track evaluation. The main task

is opinion detection in blog domain. The system

(Yang et al. 2006) is mainly divided into two parts: passage

retrieval and opinion classification. During passage retrieval,

the topics provided by NIST are parsed and query expansion is done

before sending the topics as queries to the Lemur search

engine (http://www.lemurproject.org/). Documents in

the corpus are segmented into passages around 100 words and are

the retrieval units for the search engine. The top 5,000 passages

returned by Lemur are then sent into a binary text classification

program to classified into opinions and non-opinions based the

average over their sentence-level subjectivity score. The

performance of the system is among top five participated groups.

Transfer learning is to learn from other related tasks and apply

the learned model into the current task. The most general form of

transfer learning is to learning the similar tasks from one domain

to another domain so that transfer the "knowledge" from one to

another. In the early research of transfer learning, Baxter

(Baxter 1997) and Thrun (Thrun 1996) both used

hierarchical Bayesian learning methods to tackle this problem.

Ando and Zhang (Ando & Zhang 2005) proposed a framework for

Gaussian logistic regression with transfer learning for the task

of classification and also provided a theoretical prove for

transfer learning in this setting. They learned from multiple

tasks to form a good classifier and apply it onto other similar

tasks. Raina et al. (Raina et al. 2006) continued this approach and

built informative priors for gaussian logistic regression. These

informative priors actually corresponds to the hyper-parameter in

other approaches. We follow closely with Raina et al. approach

and adapt it into the opinion detection task.

Transfer Learning

The Algorithm

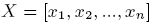

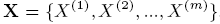

, where n is the total number

of word features. The entire dataset is

represented

as

, where n is the total number

of word features. The entire dataset is

represented

as  , where

m is the total number of sentences. A class

label for a sentence is either "opinion" or

"non-opinion", and is represented by

Y={0,1}.

, where

m is the total number of sentences. A class

label for a sentence is either "opinion" or

"non-opinion", and is represented by

Y={0,1}.

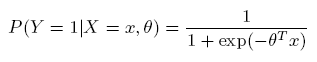

Logistic regression assumes sigmoid-like data distribution and predicts the class label according to the following formula:

To avoid overfitting, usually a multivariate Gaussian prior is added on

the regression coefficient. For simplicity, zero mean and equal

variance are assumed, which assumes the data are i.i.d.. However, this assumption is not true.

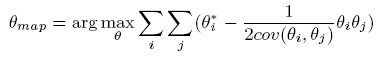

A

general prior with non-diagonal covariance

is used in this research. The

MAP estimation becomes:

is used in this research. The

MAP estimation becomes:

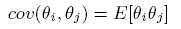

To apply the above formula, it is required to get the covariance value for every pair of regression coefficients. Given that the prior mean is 0, the covariance of any two regression coefficients becomes:

Get Covariance by MCMC

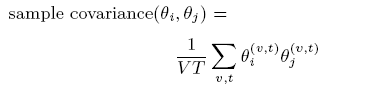

The covariance for pair-wised regression coefficients can be obtained by Markov Chain Monte Carlo (MCMC) method. Instead of real covariance, which is not going to be achieved but can be closely estimated by the sample covariance. MCMC suggests to sample several small vocabularies with the two words. Each small vocabulary is used as training data to train an ordinary logistic regression model. The sample covariance is obtained by going through words in each training set and vocabulary.

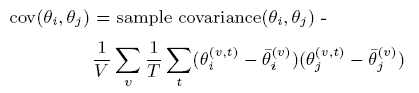

Hence the covariance is due to both randomness of vocabularies and training sets. However, only the covariance due to vocabulary change is desired in our case. Hence a correction step is performed through minus a bootstrap estimation of the covariance due to randomness of training set change.

By doing the above calculation, the covariances of each pair of regression coefficient is able to be obtained. However, given that the number of regression coefficients is corresponding to the number of word features, the total amount of computation is huge and not feasible. Therefore, a smarter way of calculating just a small amount of pair-wise covariances is necessary.Moreover, individual pair-wise covariances can only be used to estimate relationship between two words, however, what is needed is to estimate relationship among all the words. In another word, a covariance matrix is the final target to learn.

Learning a Covariance Matrix

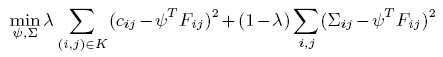

It is extremely inefficient to calculate every pair of individual covariances for all word pairs. Instead, learning indirect underlying features and representing the word features as those features will dramatically reduce the amount of computations. In this way, only a small fraction of word pairs need to be calculated their pair-wise covariances. And the rest of word pairs covariances can be estimated by a transformation from their indirect features. Therefore, the problem of learning individual covariance for each word pair is turned into the problem of learning the correspondence between an underline common feature, which will be shared by many word pairs, and a word pair itself. Mathematically, if the indirect common features are defined as a feature vector Fij.

A valid covariance matrix needs to be positive semi-definite

(PSD), which is a Hermitian matrix with all of its eigenvalues

nonnegative. In other words, it needs to be a square, self-adjoint

matrix with nonnegative eigenvalues. Clearly, the individual

pair-wise covariances obtained are not going

to form such a matrix automatically.

Hence, a

projection from the original covariances to a PSD cone is

necessary to make the matrix usable. Therefore, the covariance

matrix should be as close to a PSD matrix  as possible.

as possible.

We can solve the above two problems in a joint minimization fashion. Therefore, the overall objective function can be represented as:

is the trade-off coefficient

between the two sub objectives and it is set to 0.6 in our experiments.

The joint optimization problem in the above equation can be

solved in an minimization-minimization procedure by fixing one

argument and minimizing on another. In our case, alternatively,

is the trade-off coefficient

between the two sub objectives and it is set to 0.6 in our experiments.

The joint optimization problem in the above equation can be

solved in an minimization-minimization procedure by fixing one

argument and minimizing on another. In our case, alternatively,

is minimized over when

is minimized over when

is fixed, and

is fixed, and

is minimized over when

is minimized over when

is fixed. When minimizing over

is fixed. When minimizing over

, quadratic programming (QP) is

sufficient. There are many QP sovlers\footnote{We used the Sedumi

QP solver (http://sedumi.mcmaster.ca) in the Yalmip

(http://control.ee.ethz.ch/~joloef/yalmip.php) package. available

and can be easiliy obtained. When minimizing over

, quadratic programming (QP) is

sufficient. There are many QP sovlers\footnote{We used the Sedumi

QP solver (http://sedumi.mcmaster.ca) in the Yalmip

(http://control.ee.ethz.ch/~joloef/yalmip.php) package. available

and can be easiliy obtained. When minimizing over

, this is a special semi-definite

problem (SDP), and can be easily done by performing

eigen-decomposition and keeping the nonnegative eigenvalues, which

can be done in any standard SDP solvers.

, this is a special semi-definite

problem (SDP), and can be easily done by performing

eigen-decomposition and keeping the nonnegative eigenvalues, which

can be done in any standard SDP solvers.

Since the above equation is convex, which can be proved, there is a global minimum existing. Therefore, the minimization-minimization procedure repeats the two minimization steps and continues until a guaranteed convergence.

Dataset

Opinion Detection Task

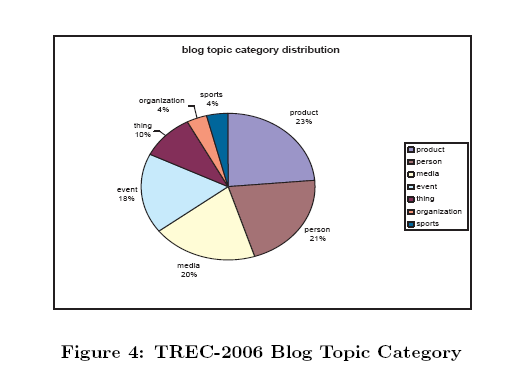

TREC 2006 Blog CorpusThe data are from TREC 2006 Blog corpus, which contains 3,201,002 blog articles (trec reports 3,215,171), is posted during the period of Dec 2005 to Feb 2006. The posts and the comments are from Technorati, Bloglines, Blogpulse and other web hosts.

Passage retrieval is performed to retrieve top 5,000 (or less than 5,000) passages for each of the 50 TREC topics. It gives 132,399 passages in total and 2,647.98 passages per topics in average.

Auxiliary Datasets- 10,000 movie review sentences, 5,000 positive, 5,000 negative (Pang and Lee, http://www.cs.cornell.edu/People/pabo/movie-review-data/ )

- 4,000+ Custom review sentences: 2,034 positive, 2,173 negative (Hu and Liu http://www.cs.uic.edu/~liub/FBS/FBS.html)

Experiments

(see related section in report for more detailes)Evaluation Metric

Precision at 11-pt recall level and Mean-average Precision (MAP) of top 1000 retrieved documents.

(Note that TREC evaluates in document level)

The answers are provided by TREC qrels.

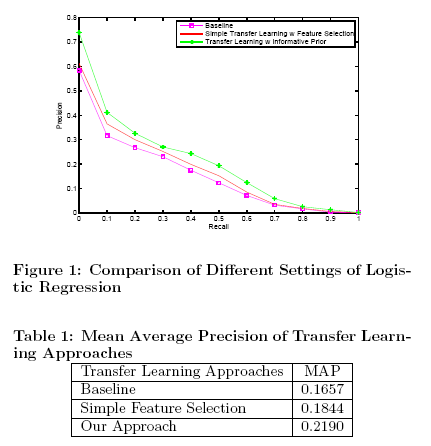

Experiment One: Effects of Using Non-diagonal Covariance Prior

This experiment compares the following three settings :

- Baseline: Using movie reviews to train the Gaussian logistic regression model with zero mean and equal variance. Vocabulary is unigram and bigrams from movie reviews. The model is directly tested on blog review data without any feature selection.

- Simple feature selection: Using movie reviews and product reviews to train the Gaussian logistic regression model with zero mean and equal variance. Vocabulary is the common unigram and bigrams from both domains. The model is test on blog review data.

- The proposed approach: Using movie reviews to calculate prior covariance, train the logistic regression model with the informative prior. Vocabulary is from the blog corpus and is different for each retrieval topic based on the unigram and bigrams in the 5,000 retrieved passages. The model is test on blog review data.

Figure 1 shows the precision at each recall level for the tested three approaches. As we can see here, the approach used in this research gives the best precision at all the 11-point recall levels. The simple feature selection method also performs better than the baseline system, which indicates that by removing the bias introduced by a single domain of data, the prediction accuracy of transfer learning is improved. It is also obvious that the current approach is a more advanced way of learning task-related common knowledge than just doing simple feature selection.

Table 1 shows the non-interpolated mean average precision of the 3 approaches. Based on previous research Raina et al. reported, the proposed approach could achieve an improvement of 20%-40% for text classification task. As for our task, we see an improvement of 32% on non-interpolated mean average precision from the baseline to the current approach. Both experiments in opinion detection and text classification show that construct non-diagonal prior covariance matrix to incorporate the external knowledge is a good way to boost the performance of gaussian logistic regression for transfer learning.

Experiment Two: Effective Underlying Features for Opinion Detection

Target-opinion word pairs and Wordnet synonyms are two main features used in this project. It is reported that Wordnet synset feature is very effective for text classification task (Raina et al 2005, Ando & Zhang 2005), by just using that, a 20%-40% improvement on text classification could be observed.

Due to that opinion detection is using text classification techniques, so that it should be able to observe the similiar effects. However, opinion detection is not purely text classification, it is not topic-wised classification, but a binary classification of opinions or non-opinions. Therefore, Wordnet synset feature may not effective to our task. We introduce a specific feature specially designed for the task of opinion detection, which is "Target-Opinion" word pairs. Each opinion is about a certain target, and this target usually has its own customary way to expression the opinion about it. There is a clear relationship between the target and the opinion about it. Is this a good feature as what we expected?

Figure 2 shows the results of an experiment which compares the three cases of using just Wordnet synset to create informative prior, using just target-opinion pairs to create informative prior and using both of them. It can be seen that applying the proposed approach with "Target-opinion" pair as the single feature is doing better than using Wordnet synset alone. When both features are used to construct the informative prior covariance, MAP reaches the best performance which the current approach in this research can achieve.

Table 2 shows that using target-opinion pair alone, there is a 27% improvement as compared to the baseline and 10% more improvement as compared to using Wordnet synset alone. It proves that our hypothesis is correct. "Target-opinion" feature is more suitable for the task of opinion detection. Wordnet synset feature also contributes to the improvement of overall performance, but sometimes, for example at recall level 0.3 in Figure 2, there is no improvement from baseline to using Wordnet synset alone. It is not saying that this is a bad feature, but give us a hint that sometimes, Wordnet synset will not always be effective for the task of opinion detection.

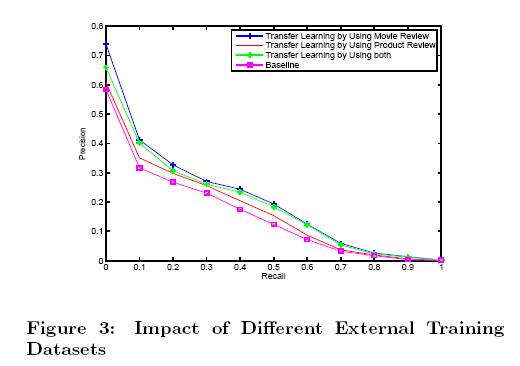

Experiment Three: Single Auxilliary Dataset vs. Multiple Auxilliary Datasets

In our TREC-2006 submission, we selected common unigram and bi-gram features from both movie review and product review domains, with the belief that the intersection part could capture the common features across different domains as long as the task is the same, in this case, opinion detection. It is natural to extend this thought to apply it into the approach used in this research, i.e., using both movie reviews and product reviews to train the Gaussian logistic regression model and also using both of them to generate prior.

Figure 3 shows the mean average precision at 11-point recall level for applying current approach with different external datasets. Surprisingly, using movie domain alone gives the best performance. Using product reviews to train the model results a performance drop as compared with using both domains, which not show an additive improvement as we expected. In this case, the negative effect of transfer learning is observed. It tells us that even transfer learning is effective, but sometimes it will not help much if a bad external training dataset is selected.

In our case, blog domain (target domain) covers more general topics as shown in Figure 4, movie domain (training domain) talking about mainly movies, but also talking about the people, objects, organizations in the movie, and hence matches blog domain better. On the other hand, product domain concentrates on customer reviews about several electronic products, it only helps a certain type of topics in blog opinion detection, not all of them. The experiment tells us that selecting a good external dataset is very important to avoid negative effect of transfer learning.

Conclusion

This paper describes a transfer learning approach which incorporates common knowledge for the same task from external domains as a non-diagonal informative prior covariance matrix. It brings a way to solve the problem of lacking of enough training data or even no training data from the target domain.

The approach is adapted to the task of opinion detection, which is a very interesting research topic recently. In our TREC-2006 system, opinion detection is separated into two sub-tasks, passage retrieval and text classification. Passage retrieval engine searches passages related to the query topics and return them by the confidence score. Text classification is a binary classification problem, either opinion or non-opinion. Sentences are the unit to perform this classification. Gaussian Logistic Regression is used as the general framework. In the proposed approach, an informative prior covariance matrix is constructed by incorporating external knowledge of "Target-Opinion" word pairs and Wordnet synset information. The results shown in the experiments prove that this is an effective approach with the fact that it achieves an 32% mean average precision improvement over baseline.

There are two main contributions of this work to the general communities of machine learning and opinion detection: first, solve the problem of with no labelled training data how to performing opinion detection for certain domains, second, study and extend transfer learning to opinion detection and explore important features for this task.

The future work will be a natural extension of the current work. In the experiment about the effect of different external datasets, we found that different datasets actually help the precision of opinion detection of different blog topics. Therefore, if we do blog topic classification and then use different external datasets as training data for each topic category, a greater improvement from the baseline should be observed.

References

- L. L. B. Pang and S. Vaithyanathan. Thumbs up? sentiment classifiction using machine learning techniques. In proceedings of 2002 conference on Empirical Methods in Natural Language Processing. EMNLP, 2002.

- J. Baxter. A bayesian/information theoretic model of learning to lear via multiple task sampling. In Machine Learning. Machine Learning, 1997.

- L. L. Bo Pang. A sentimental education: Sentiment analysis using subjectivity summarization based on minimum cuts. In proceedings of ACL 2004. ACL, 2004.

- R. C. B. J. F. M. B. M. C. C. F. J. G. S. H. M. A. H. G. H. D. A. J. R. K. K. T. K. S. L. C. L. G. A. M. K. J. M. D. M. N. N. U. P. P. R. D. S.-O. R. T. R. P. v. d. R. E. V. Christiane Fellbaum, Reem Al-Halimi. WordNet: An Electronic Lexical Database. MIT Press, May 1998.

- J. W. E. Riloff Learning extraction patterns for subjective expressions. In proceedings of the 2003 conference on Empirical Methods in Natural Language Processing. EMNLP, 2003.

- J. C. Hui Yang, Luo Si. Knowledge transfer and opinion detection in the trec2006 blog track. In Notebook of Text REtrieval Conference 2006. TREC, Nov 2006.

- C. M. G. M. I. S. Iadh Ounis, Maarten De Rijke. Overview of the trec-2006 blog track. In Notebook of Text REtrieval Conference 2006. TREC, Nov 2006.

- A. S. K. Yu, V. Tresp. Learning gaussian processes from multiple tasks. In Proceedings of ICML 2005. ICML, 2005.

- J. C. P. N. D. Lawrence. Learning to learn with the informative vector machine. In Proceedings of ICML 2004. ICML, 2004.

- T. Z. R. Ando. A framework for learning predictive structure from multiple tasks and unlabeled data. ACM Jounal of Machine Learning Research, May 2005.

- A. Y. N. Rajat Raina and D. Koller. Transfer learning by constructing informative priors. In Proceedings of the Twenty-second International Conference on Machine Learning. ICML, March 2006.

- K. T. T. F. S. Morinaga, K. Yamanishi. Mining product reputations on the web. In Proceedings of SIGKDD 2002. SIGKDD, 2002.

- S. Thrun. Is learning the n-th thing any easier than learning the first? In Proceedings of NIPS 1996. NIPS, 1996.

- P. D. Turney. Thumbs up or thumbs down? semantic orientation applied to unsupervised classification of reviews. In Proceedings of ACL 2002. ACL, July 2002.

Copyright © 2007 Grace Hui Yang and Prof. Yiming Yang.

Last modified: April 18, 2007. 19:36:41 pm